WWDC 2024: Siri Could Get an AI Glow Up to Better Compete With ChatGPT and Gemini


We now live in a world where virtual assistants can participate in seamless (and even flirtatious) conversation with people. But Apple’s virtual assistant, Siri, struggles with some of the basics.

For example, I asked Siri when the Olympics were going to be held this year, and she quickly spat out the correct dates for the summer games. When I followed that up with “Add it to my calendar,” the virtual assistant responded, imperfectly, with “What should I call it?” The answer to that question would be obvious to us humans, but Apple’s virtual assistant was lost. Even when I answered “Olympics,” Siri responded, “When should I schedule it for?”

Siri tends to falter because she lacks contextual awareness, which limits her ability to follow a conversation the way a human would. That could change as early as June 10, the first day of Apple’s annual Worldwide Developers Conference. The iPhone manufacturer is expected to unveil major updates to its upcoming mobile operating system, which is likely to be named iOS 18, with significant changes reportedly in store for Siri.

Apple’s virtual assistant made waves when it debuted with the iPhone 4S in 2011. For the first time, people could talk to their phones and get a humanlike response. Some Android phones offered basic voice search and voice actions before Siri, but they were more command-based and widely considered less intuitive.

Siri represented a leap forward in voice interaction and laid the groundwork for subsequent voice assistants such as Amazon’s Alexa, Google Assistant and even OpenAI’s ChatGPT and Google’s Gemini chatbots.

Move over Siri, multimodal assistants are here

Although Siri impressed people with its voice experience in 2011, its capabilities are seen by some as lagging behind those of its peers. Alexa and Google Assistant are skilled at understanding and answering questions, and both have expanded into smart homes in ways that Siri has not. It just seems like Siri hasn’t reached its full potential, even though its rivals have received similar criticism.

In 2024, Siri also faces a dramatically different competitive landscape, one reshaped by generative AI. In recent weeks, OpenAI, Google and Microsoft have unveiled a new wave of futuristic virtual assistants with multimodal capabilities that pose a competitive threat to Siri. According to NYU professor Scott Galloway on a recent episode of his podcast, these updated chatbots are poised to be the “killers of Alexa and Siri.”

Scarlett Johansson and Joaquin Phoenix attended the premiere of Her at a film festival in 2013. Fast forward to 2024 and Johansson accused OpenAI of reproducing her voice for its chatbot without her permission.

Earlier this month, OpenAI unveiled its latest AI model, GPT-4o. The announcement highlighted how far virtual assistants have come. In its demo in San Francisco, OpenAI showed how GPT-4o can hold two-way conversations in even more humanlike ways, along with the ability to change tone, make sarcastic remarks, whisper and even flirt. The technology demonstrated quickly drew comparisons to Scarlett Johansson’s character in the 2013 Hollywood drama Her, in which a lonely writer falls in love with his female-sounding virtual assistant, voiced by Johansson. After the demonstration of GPT-4o, the American actor accused OpenAI of creating a virtual assistant voice that sounded “eerily similar” to hers, without her permission. OpenAI said the voice was never intended to resemble Johansson’s.

The controversy appears to have overshadowed some of GPT-4o’s features, such as its multimodal capabilities, meaning the AI model can understand and respond to inputs beyond text, including photos, spoken language and even video. In practice, GPT-4o can talk to you about a photo you show it (by uploading media), describe what’s happening in a video and discuss a news article.


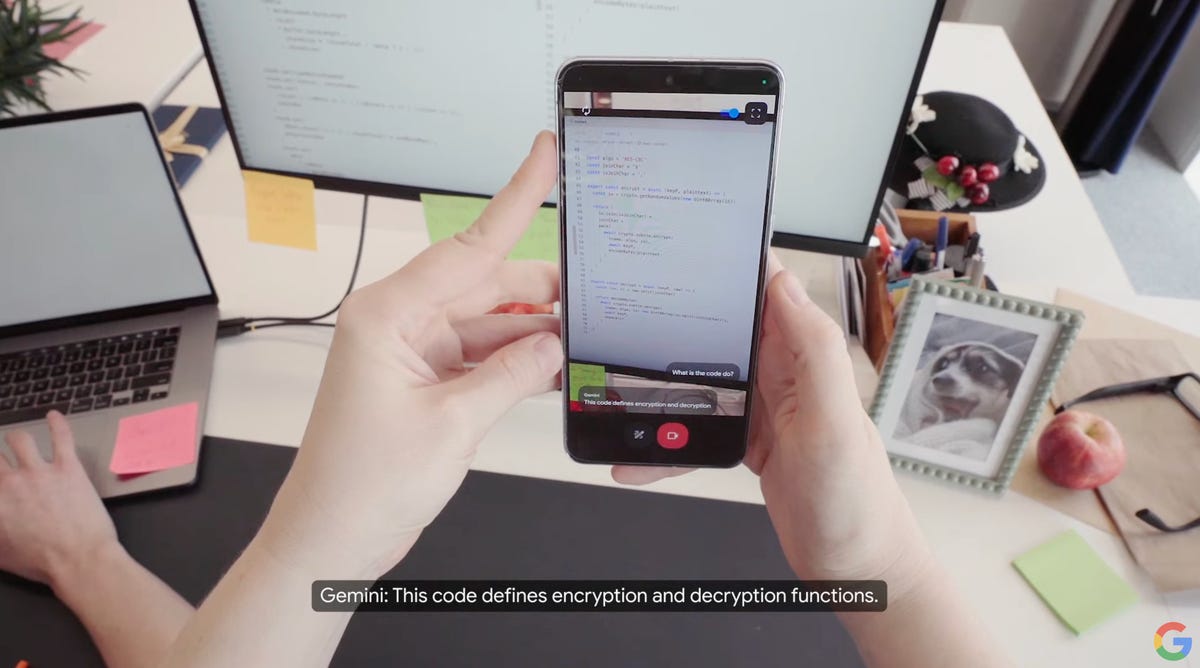

A day after the OpenAI preview, Google showed off its own multimodal demo, showcasing Project Astra, a prototype that the company touted as “the future of AI assistants.” In a demo video, Google showed how users can show its virtual assistant their surroundings using their smartphone’s camera, and then proceed to discuss objects in their environment. For example, the person interacting with Astra at Google’s London office asked the assistant to identify an object in the room that was making a sound. In response, Astra pointed to the speaker sitting on a desk.

Google demonstrated Astra on a phone and also on camera glasses.

The Google Astra prototype can not only make sense of its surroundings, but also remember details. When the narrator asked where they left their glasses, Astra was able to say where they were last seen, answering “On the corner of the desk next to a red apple.”

The race to create brilliant virtual assistants doesn’t end with OpenAI and Google. Elon Musk’s artificial intelligence company, xAI, is making progress on giving its Grok chatbot multimodal capabilities, according to public developer documents. In May, Amazon said it was working on giving Alexa, its decade-old virtual assistant, an AI-powered upgrade.

Will Siri go multimodal?

Multimodal conversational chatbots are currently the cutting edge of AI assistants, potentially offering a window into the future of how we navigate our phones and other devices.

Apple doesn’t yet have a digital assistant with multimodal capabilities, which puts it behind the curve. However, the iPhone manufacturer has published research on the subject. In October, it discussed Ferret, a multimodal AI model that can understand what’s happening on your phone’s screen and perform a set of tasks based on what it sees. In the paper, researchers explore how Ferret can identify and report what you’re looking at and help you switch between apps, among other things. The research points to a possible future where the way we use our iPhones and other devices changes entirely.

Apple is exploring the functionality of a multimodal AI assistant called Ferret. In this example, the assistant helps the user navigate an application, with Ferret performing basic and advanced tasks, such as describing a screen in detail.

Where Apple can stand out is in terms of privacy. The iPhone maker has long championed privacy as a core value in product and service design, and will bill the new version of Siri as a more private alternative to its competitors, according to The New York Times. Apple is expected to achieve this privacy goal by handling Siri requests on the device and turning to the cloud for more complex tasks. Those will be processed in data centers with Apple-made chips, according to a Wall Street Journal report.

On the chatbot side, Apple is close to finalizing a deal with OpenAI to potentially bring ChatGPT to the iPhone, according to Bloomberg, a possible indication that Siri will not compete directly with ChatGPT or Gemini. Instead of doing things like writing poetry, Siri will refine the tasks it can already do and become better at them, according to The New York Times.

As part of a WWDC 2012 demo, Scott Forstall, Apple’s senior vice president of iOS software, asked Siri to look up a baseball player’s batting average.

How will Siri change? All eyes on Apple’s WWDC

Traditionally, Apple has been deliberately slow to market, preferring to take a wait-and-see approach to emerging technologies. This strategy has often worked, but not always. For example, the iPad wasn’t the first tablet, but to many, including CNET editors, it is the best tablet. On the other hand, Apple’s HomePod smart speaker hit the market a few years after the Amazon Echo and Google Home, but it never reached the market share of its competitors. A more recent example on the hardware side is folding phones. Apple is the only major holdout. Every major competitor, including Google, Samsung, Honor, Huawei and even lesser-known companies like Phantom, beat Apple to the punch.

Historically, Apple has taken the approach of updating Siri at intervals, says Avi Greengart, lead analyst at Techsponential.

“Apple has always been more programmatic about Siri than Amazon, Google or even Samsung,” Greengart said. Apple seems to add knowledge to Siri in batches: sports one year, entertainment the next.

With Siri, Apple is expected to play catch-up rather than break new ground this year. Still, Siri is likely to be a major focus of Apple’s upcoming operating system, iOS 18, which is rumored to bring new AI features. Apple is expected to show additional AI integrations into existing apps and features, including Notes, emojis, photo editing, messaging and email, according to Bloomberg.

Siri can answer health-related questions on the Apple Watch Series 9 and Ultra 2.

As for Siri, this year it is expected to become a smarter digital assistant. Apple is reportedly training its voice assistant on large language models to improve its ability to answer questions with greater accuracy and sophistication, according to the October edition of Mark Gurman’s Bloomberg newsletter Power On.

The integration of large language models, as well as the technology behind ChatGPT, is poised to transform Siri into a more context-aware and powerful virtual assistant. This would allow Siri to understand more complex and more nuanced questions and also provide accurate answers. This year’s iPhone 16 lineup is also expected to come with more memory to support new Siri capabilities, according to The New York Times.


“My hope is that Apple can use generative AI to give Siri the ability to feel more like an attentive assistant that understands what you’re trying to ask, but uses data-driven response systems that are tied to data,” Techsponential’s Greengart told CNET.

Siri could also improve at multitasking. A September report from The Information details how Siri could respond to simple voice commands for more complex tasks, such as turning a set of images into a GIF and then sending it to one of your contacts. This would be a significant step forward in Siri’s capabilities.

“Apple also defines how iPhone apps work, so it has the ability to allow Siri to work in different apps with the developer’s permission, potentially opening up new opportunities for a more intelligent Siri to securely perform tasks on your behalf,” Greengart said.


Editor’s note: CNET used an AI engine to help create several dozen stories that were labeled accordingly. The note you’re reading is attached to articles that deal essentially with the topic of AI, but were created entirely by our expert editors and writers. For more see our AI Policy.
